This post looks at the main difficulties faced while using a classifier to block attacks: handling mistakes and uncertainty such that the overall system remains secure and usable.

At a high level, the main difficulty faced when using a classifier to block attacks is how to handle mistakes. The need to handle errors correctly can be broken down into two challenges: how to strike the right balance between false positives and false negatives, to ensure that your product remains safe when your classifier makes an error; and how to explain why something was blocked, both to inform users and for debugging purposes. This post explores those two challenges in turn.

This post is the third in a series of four dedicated to providing a concise overview of how to use artificial intelligence (AI) to build robust anti-abuse protections. The first post explains why AI is key to building robust anti-abuse defenses that keep up with user expectations and increasingly sophisticated attackers. Following the natural progression of building and launching an AI-based defense system, the second post covers the challenges related to training, and the fourth and final post looks at how attackers go about attacking AI-based defenses.

This series of posts is modeled after the talk I gave at RSA 2018. Here is a re-recording of this talk:

You can also get the slides here.

Disclaimer: This series is intended as an overview for everyone interested in the subject of harnessing AI for anti-abuse defense, and it is a potential blueprint for those who are making the jump. Accordingly, this series focuses on providing a clear high-level summary, deliberately not delving into technical details. That being said, if you are an expert, I am sure you will find ideas and techniques that you haven’t heard about before, and hopefully you will be inspired to explore them further.

Let’s get started!

Striking the right balance between false positives and false negatives

The single most important decision you have to make when putting a classifier in production is how to balance your classifier error rates. This decision deeply affects the security and usability of your system. This struggle is best understood through a real-life example, so let’s start by looking at one of the toughest: the account recovery process.

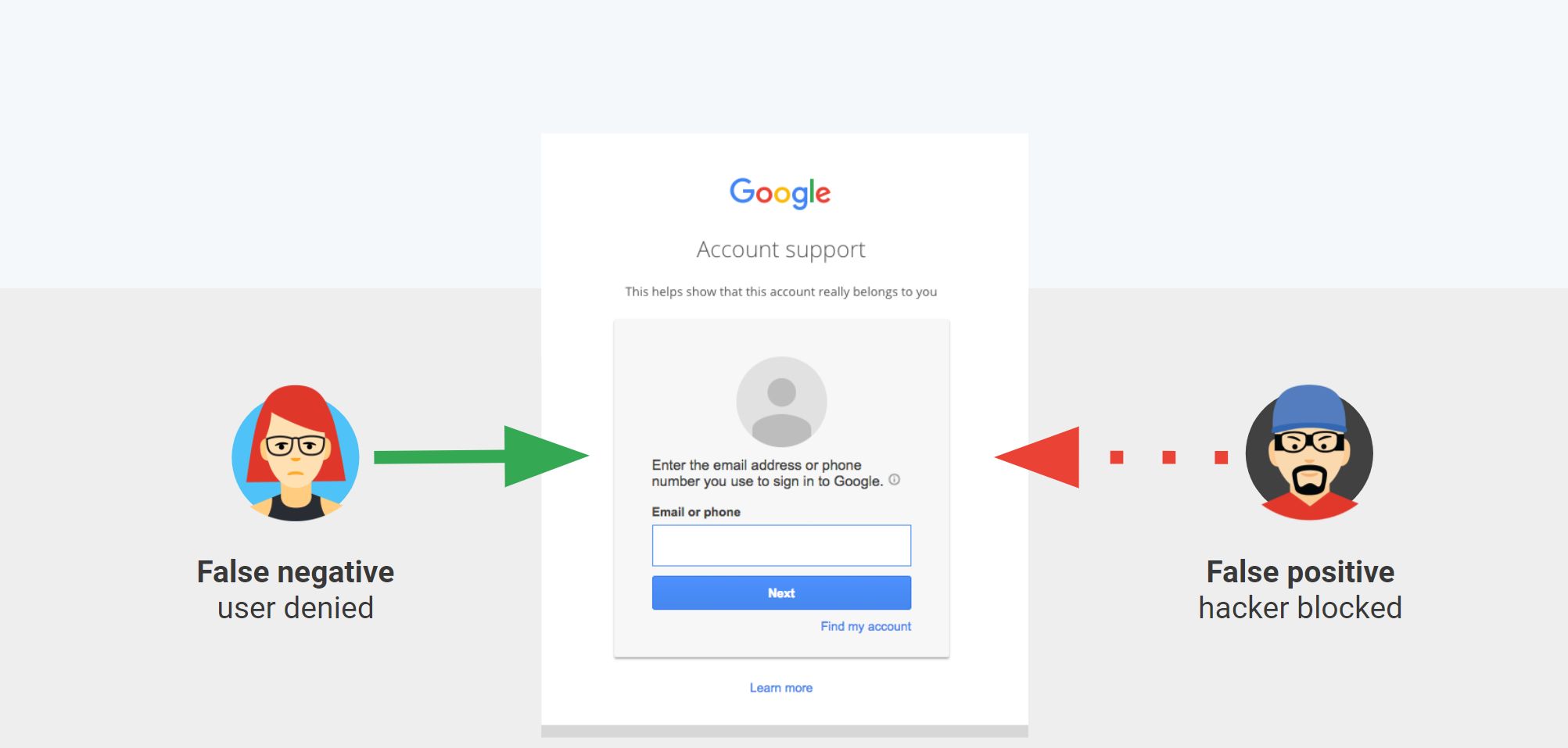

When a user loses access to their account, they have the option to go through the account recovery process, supply information to prove they are who they claim to be, and get their account access back. At the end of the recovery process the classifier has to decide, based on the information provided and other signals, whether or not to let the person claiming to be the user recover the account.

The key question here is what the classifier should do when it is not clear what the decision should be. Technically, this is done by adjusting the trade-off between the false positive rate and the false negative rate, which is closely related to the classifier’s sensitivity and specificity. There are two options:

- Make the classifier cautious, which is to favor reducing false positives (hacker break-in) at the expense of increasing false negatives (legitimate user denied).

- Make the classifier optimistic, which is to favor reducing false negatives (legitimate user denied) at the expense of increasing false positives (hacker break-in).

While both types of error are bad, it is clear that for account recovery, letting a hacker break into a user’s account is not an option. Accordingly, for that specific use case, the classifier must be tuned to err on the cautious side. Technically, this means we are willing to reduce the false positive rate at the expense of slightly increasing the false negative rate.

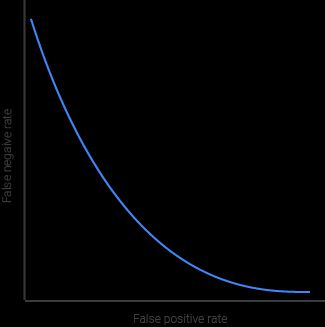

It is important to note that the relation between the false positive rate and the false negative rate is not linear, as illustrated in the figure above. In practical terms, this means that the more you reduce one at the expense of the other, the higher your overall error rate will be. There is no free lunch :-)

For example, you might be able to reduce the false negative rate from 0.3% to 0.2% by slightly increasing the false positive rate from 0.3% to 0.42%. However, reducing the false negative rate further, to 0.1%, will increase your false positive rate to a whopping 2%. Those numbers are made up but they do illustrate how the nonlinear relation that exists between false positives and false negatives plays out.
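
To make this trade-off concrete, here is a minimal sketch of how a decision threshold could be chosen under a false positive budget. It assumes the classifier outputs a score where higher means "more likely the legitimate owner", and it follows the account recovery convention used above: a false positive is an attacker let in, a false negative is a legitimate user denied. The function names and the toy data are made up for illustration.

```python
def error_rates(scores, labels, threshold):
    """Return (false_positive_rate, false_negative_rate) at a threshold.

    Assumed convention: label 1 = legitimate owner, label 0 = attacker.
    Access is granted when score >= threshold.
    """
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    negatives = sum(1 for y in labels if y == 0)
    positives = sum(1 for y in labels if y == 1)
    return fp / negatives, fn / positives


def pick_threshold(scores, labels, max_fpr):
    """Pick the lowest threshold whose false positive rate is within max_fpr.

    Raising the threshold can only lower the false positive rate, so the
    first candidate that fits the budget denies the fewest legitimate users.
    """
    candidates = sorted(set(scores)) + [float("inf")]
    for t in candidates:
        fpr, fnr = error_rates(scores, labels, t)
        if fpr <= max_fpr:
            return t, fpr, fnr


# Toy validation set (made-up numbers): 5 attackers and 5 legitimate users.
scores = [0.05, 0.2, 0.35, 0.4, 0.6, 0.65, 0.8, 0.9, 0.95, 0.99]
labels = [0, 0, 0, 1, 0, 1, 1, 0, 1, 1]

cautious = pick_threshold(scores, labels, max_fpr=0.0)
optimistic = pick_threshold(scores, labels, max_fpr=0.2)
print("cautious:", cautious)
print("optimistic:", optimistic)
```

With this toy data, a zero false positive budget pushes the threshold up to 0.95 and denies three of the five legitimate users, whereas tolerating one attacker in five lowers it to 0.65 and denies only one.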

To sum up, the first challenge faced when using classifiers in production to detect attacks is that:

In fraud and abuse the margin for error is often nonexistent, and some error types are more costly than others.

This challenge is addressed by paying extra attention to how classifier error rates are balanced, to ensure that your systems are as safe and usable as possible. Here are three key points you need to consider when balancing your classifier:

- Use manual reviews: When the stakes are high and the classifier is not confident enough, it might be worth relying on a human to make the final determination.

- Adjust your false positive and false negative rates: Skew your model errors in one direction or the other, adjusting it so that it errs on the correct side to keep your product safe.

- Implement catch-up mechanisms: No classifier is perfect, so implementing catch-up mechanisms to mitigate the impact of errors is important. Catch-up mechanisms include an appeal system and in-product warnings.
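
The first two points can be combined in a simple three-way decision rule. The sketch below is one possible way to do it, assuming the same "higher score means more likely legitimate" convention as in the account recovery example; the function name and both cut-off values are hypothetical and would need to be tuned on your own data.

```python
def route_recovery_request(score, grant_above=0.95, deny_below=0.30):
    """Decide what to do with an account recovery request.

    score: assumed classifier confidence that the claimant is the real owner.
    grant_above / deny_below: illustrative cut-offs, not recommended values.
    Anything in between is too uncertain and goes to a human reviewer.
    """
    if score >= grant_above:
        return "grant"
    if score <= deny_below:
        return "deny"
    return "manual_review"


for s in (0.99, 0.60, 0.10):
    print(s, "->", route_recovery_request(s))
```

Widening the band between the two cut-offs sends more cases to humans, which lowers both error types at the cost of review time.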

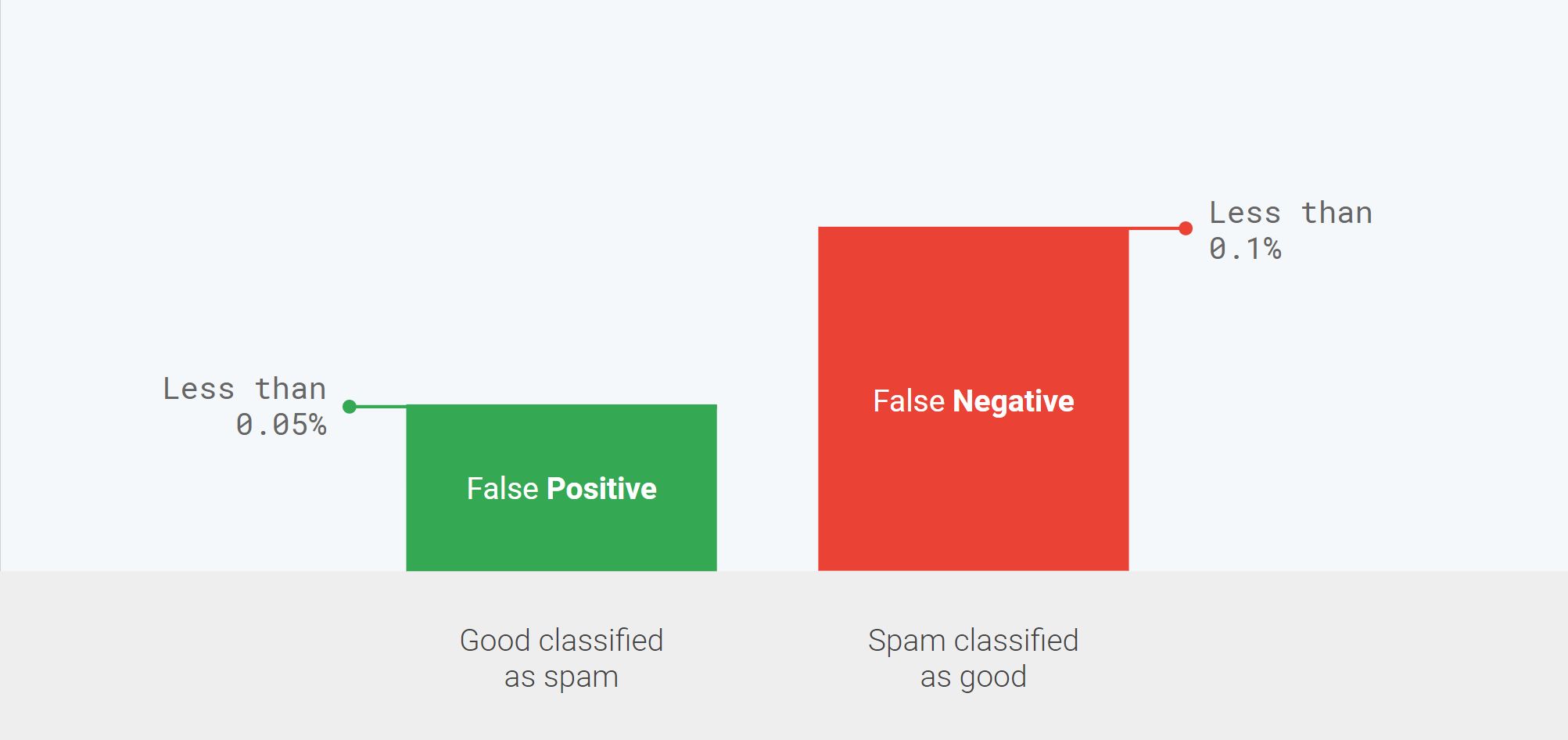

To wrap up this section, let’s consider how Gmail spam classifier errors are balanced.

Gmail users really don’t want to miss an important email but are okay with spending a second or two to get rid of spam in their inboxes, provided it doesn’t happen too often. Based on this insight, we made the conscious decision to bias the Gmail spam classifier to ensure that the false positive rate (which means good emails that end up in the spam folder) is as low as possible. Reducing the false positive rate to 0.05% is achieved at the expense of a slightly higher false negative rate (which means spam in user inboxes) of 0.1%.

Predicting is not explaining

Our second challenge is that being able to predict if something is an attack does not mean you are able to explain why it was detected.

Fundamentally, dealing with attacks and abuse attempts is a binary decision: you either block something or you don’t. However, in many cases, especially when the classifier makes an error, your users will want to know why something was blocked. Being able to explain how the classifier reached a particular decision requires additional information that must be gathered through additional means.

Here are three potential directions that will allow you to collect the additional information you need to explain your classifier’s decisions.

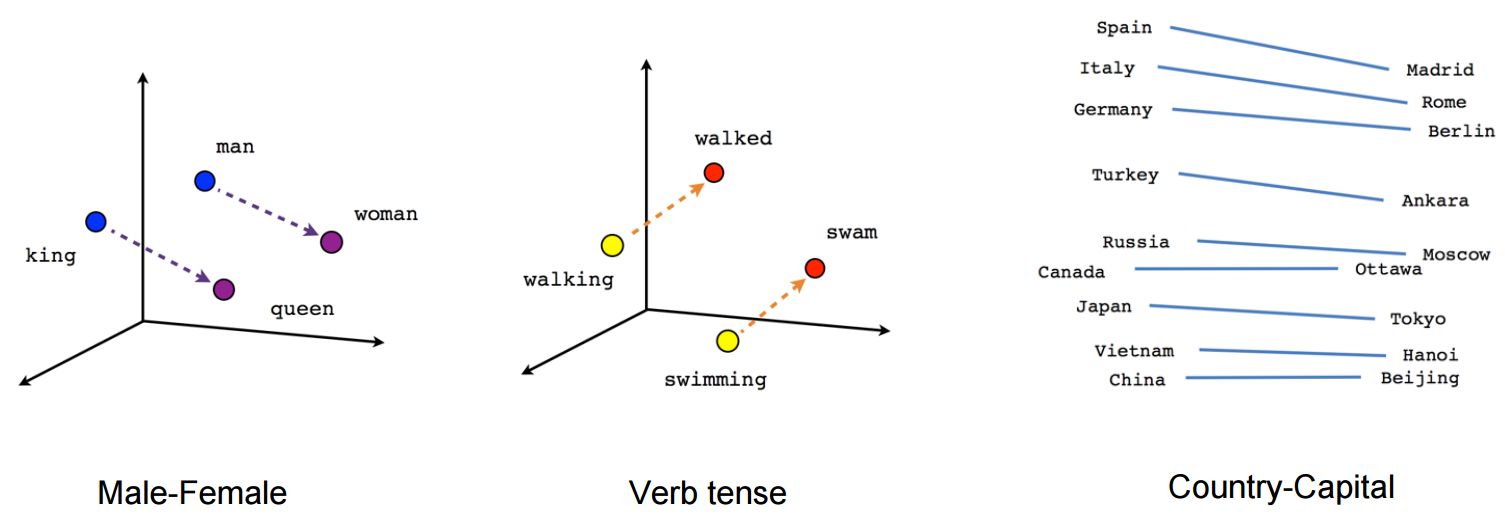

1. Use similarity to known attacks

First, you can look at how similar a given blocked attack is to known attacks. If it is very similar to one of them, then it is very likely that the blocked attack was a variation of it. Performing this type of explanation is particularly easy when your model uses embeddings, because you can directly apply distance computations to those embeddings to find related items. This has been applied successfully to words and faces, for example.
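
As a rough illustration of this idea, the sketch below compares the embedding of a blocked item against a small catalog of known attacks using cosine similarity. The catalog entries, the three-dimensional vectors, and the 0.8 similarity cut-off are all invented for the example; real embeddings would come from your model and have many more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_known_attack(embedding, known_attacks, min_similarity=0.8):
    """Return (name, similarity) of the closest known attack.

    The name is None when nothing is similar enough to serve as an
    explanation. min_similarity is an illustrative cut-off.
    """
    best_name, best_sim = None, -1.0
    for name, vector in known_attacks.items():
        sim = cosine_similarity(embedding, vector)
        if sim > best_sim:
            best_name, best_sim = name, sim
    if best_sim >= min_similarity:
        return best_name, best_sim
    return None, best_sim


# Hypothetical catalog of embeddings for previously seen attacks.
known_attacks = {
    "phishing_campaign_a": [1.0, 0.0, 0.0],
    "credential_stuffing_b": [0.0, 1.0, 0.0],
}

match, similarity = nearest_known_attack([0.9, 0.1, 0.0], known_attacks)
print(match, round(similarity, 3))
```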

2. Train specialized models

Instead of having a single model that classifies all attacks, you can use a collection of more specialized models that target specific classes of attacks. Splitting the detection into multiple classifiers makes it easier to attribute a decision to a specific attack class because there is a one-to-one mapping between the attack type and the classifier that detected it. Also, in general, specialized models tend to be more accurate and easier to train, so you should rely on those if you can.
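
Here is a small sketch of how that one-to-one mapping can be turned into an explanation. The two detectors are trivial keyword checks standing in for trained models, and their names and rules are made up; the point is only that the name of the detector that fired doubles as the reason for the block.

```python
def looks_like_phishing(message):
    # Stand-in for a trained phishing model (hypothetical rule).
    return "verify your password" in message.lower()


def looks_like_advance_fee_scam(message):
    # Stand-in for a trained scam model (hypothetical rule).
    return "wire transfer" in message.lower()


# One specialized detector per attack class.
DETECTORS = {
    "phishing": looks_like_phishing,
    "advance_fee_scam": looks_like_advance_fee_scam,
}


def classify_with_reason(message):
    """Run every specialized detector and report which ones fired."""
    reasons = [name for name, detector in DETECTORS.items() if detector(message)]
    return {"blocked": bool(reasons), "reasons": reasons}


print(classify_with_reason("Please verify your password now"))
print(classify_with_reason("Lunch at noon?"))
```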

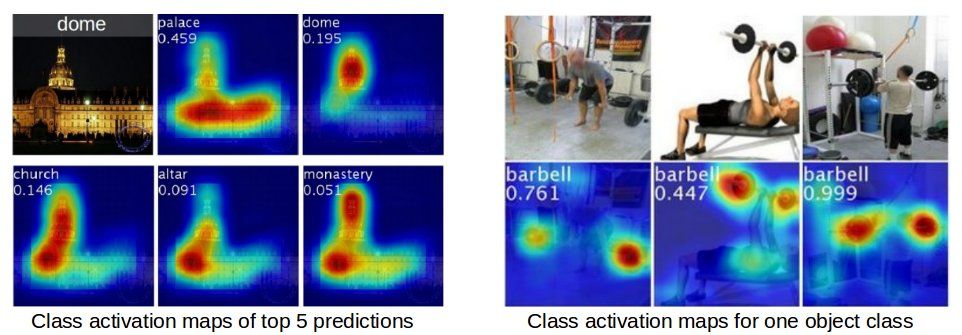

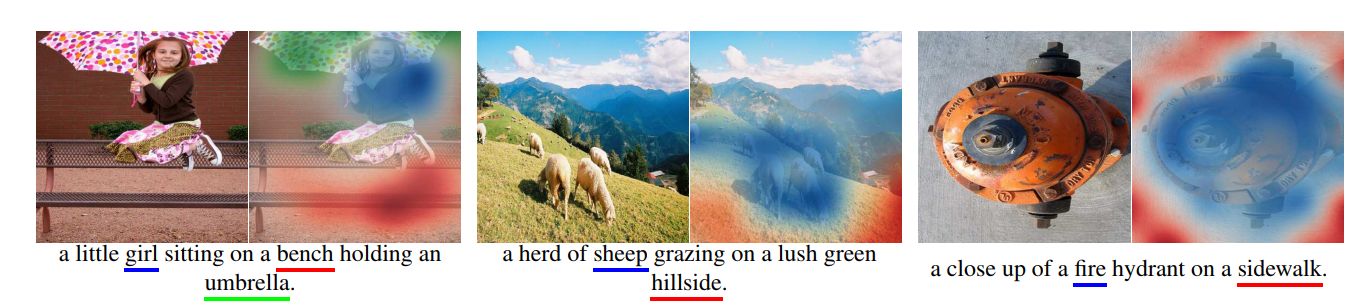

3. Leverage model explainability

Last but not least, you can analyze the inner state of the model to glean insights about why a decision was made. As you can see in the screenshot above, for example, a class-specific saliency map (see this recent paper) helps us to understand which section of the image contributed the most to the decision. Model explainability is a very active field of research, and a lot of great tools, techniques, and analyses have been released recently.
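
The same gradient-based idea can be shown on a model far simpler than an image network. The sketch below is a deliberately reduced stand-in, not the method from the paper: it takes a logistic model over three made-up features and uses the absolute gradient of the predicted probability with respect to each input as its saliency. The feature names, weights, and bias are invented for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def input_saliency(weights, bias, features):
    """Absolute gradient of the predicted probability w.r.t. each input.

    For p = sigmoid(w . x + b), the gradient is dp/dx_i = p * (1 - p) * w_i.
    """
    z = bias + sum(w * x for w, x in zip(weights, features))
    p = sigmoid(z)
    return [abs(p * (1.0 - p) * w) for w in weights]


def most_influential(feature_names, saliency):
    """Name of the feature with the largest saliency value."""
    return max(zip(feature_names, saliency), key=lambda pair: pair[1])[0]


# Hypothetical spam model over three hand-picked features.
feature_names = ["num_links", "sender_age_days", "has_attachment"]
weights = [1.2, -0.05, 0.4]
bias = -1.0

saliency = input_saliency(weights, bias, [3.0, 10.0, 1.0])
top_feature = most_influential(feature_names, saliency)
print(top_feature)
```

For a deep model the gradient would be obtained through automatic differentiation rather than a closed-form expression, but the way the result is read is the same: the inputs with the largest gradient magnitude are the ones the decision is most sensitive to.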

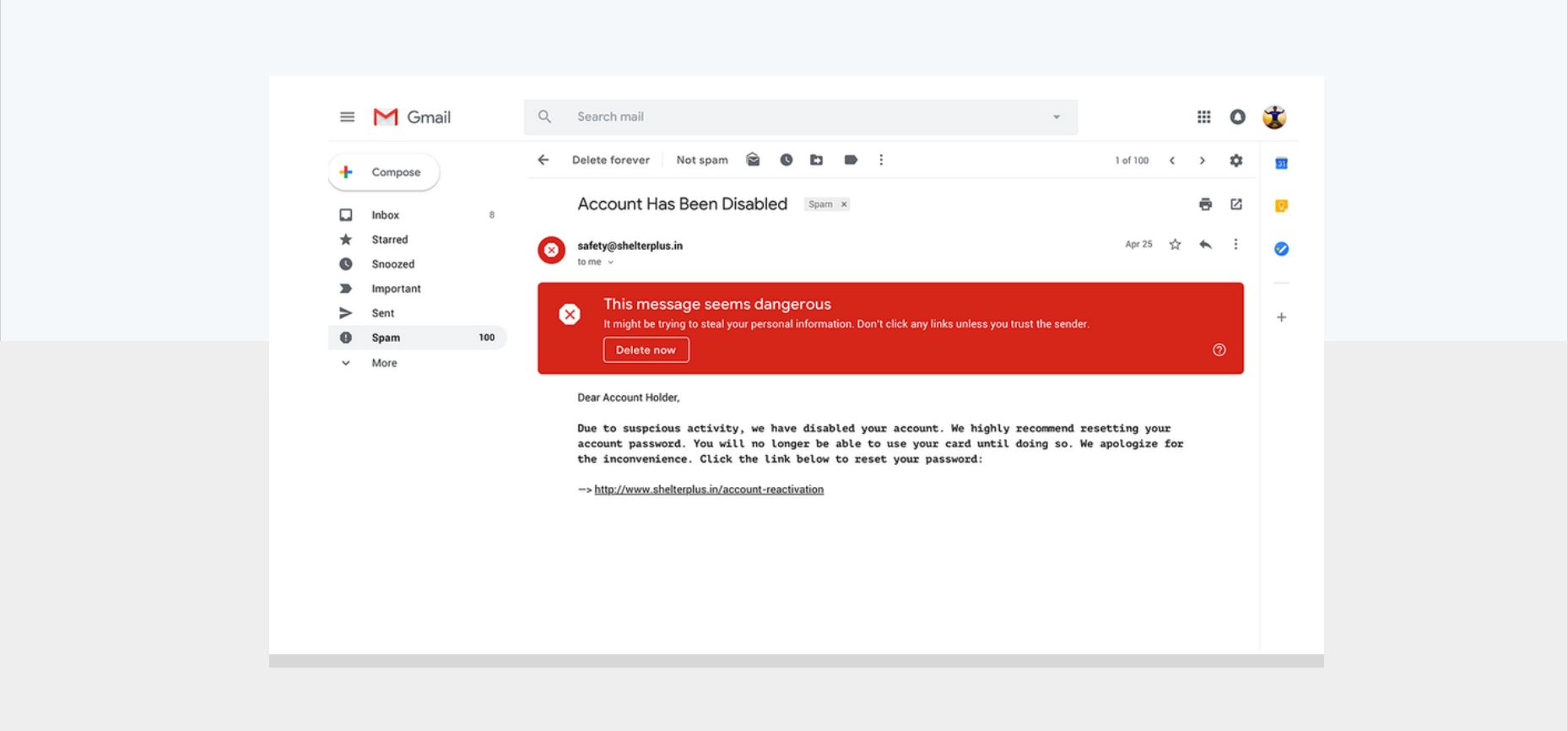

Gmail as an example

Gmail makes use of explainability to help users better understand why something is in the spam folder and why it is dangerous. As visible in the screenshot above, at the top of each spam email we add a red banner that explains why the email is dangerous, and does so in simple terms meant to be understandable to every user.

Conclusion

Overall, this post can be summarized as follows:

Successfully applying AI to abuse fighting requires handling classifier errors in a safe way and being able to understand how a particular decision was reached.

The next post in the series discusses the various attacks against classifiers and how to mitigate them.

Thank you for reading this post till the end! If you enjoyed it, don’t forget to share it on your favorite social network so that your friends and colleagues can enjoy it too and learn about AI and anti-abuse.

A bientôt!