This post explains why artificial intelligence (AI) is the key to building anti-abuse defenses that keep up with user expectations and combat increasingly sophisticated attacks. It is the first post in a four-part series that provides a concise overview of how to harness AI to build robust anti-abuse protections.

The remaining three posts delve into the top 10 anti-abuse-specific challenges encountered when applying AI to abuse fighting, and how to overcome them. Following the natural progression of building and launching an AI-based defense system, the second post covers the challenges related to training, the third post delves into classification issues and the fourth and final post looks at how attackers go about attacking AI-based defenses.

This series of posts is modeled after the talk I gave at RSA 2018. Here is a re-recording of this talk:

You can also get the slides here.

Disclaimer: This series is meant to provide an overview for everyone interested in harnessing AI for anti-abuse defense, and to serve as a potential blueprint for those who are making the jump. Accordingly, it focuses on a clear high-level summary and deliberately avoids technical details. That being said, if you are an expert, I am sure you will find ideas and techniques you haven’t heard about before, and hopefully you will be inspired to explore them further.

Let’s kick off this series with a motivating example.

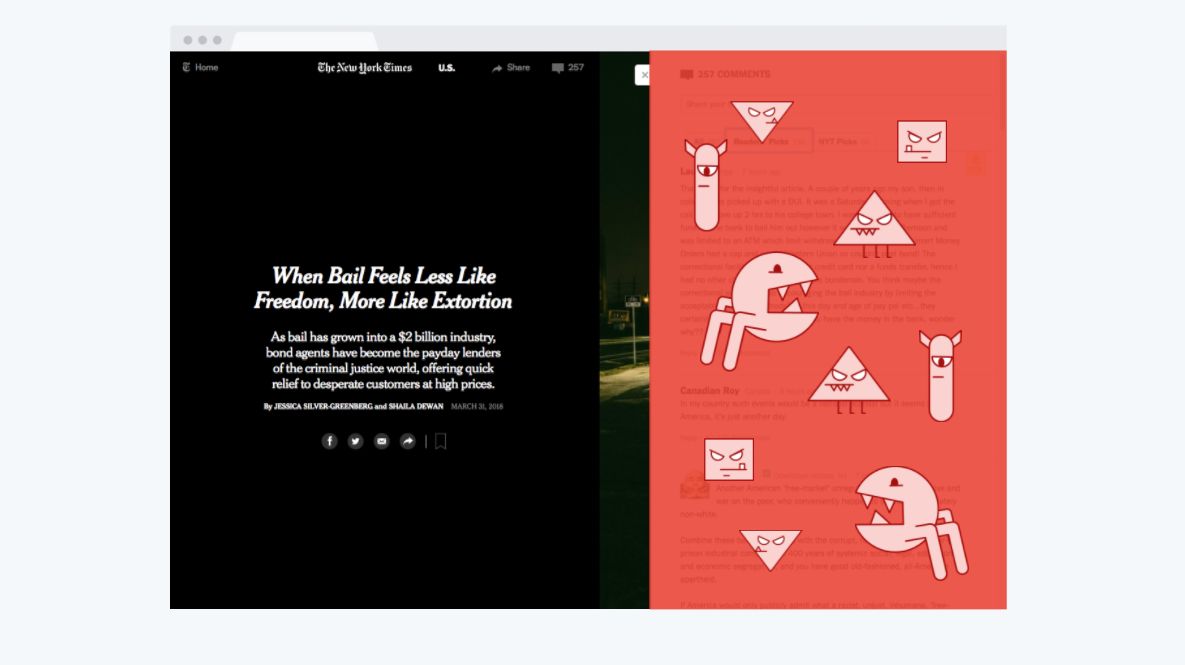

I am an avid reader of The New York Times, and one of my favorite moments on the site is finding a comment that offers an insightful perspective and helps me better understand the significance of the news. Knowing this, you can imagine my unhappiness back in September 2017, when The New York Times announced its decision to close its comment sections because it couldn’t keep up with the trolls who relentlessly attempted to derail the conversation :-(

This difficult decision created a backlash from its readership, which felt censored and didn’t understand the reasoning behind it. This led The New York Times to go on record a few days later to explain that it couldn’t keep up with the troll onslaught and felt it had no choice other than to close the comments in order to maintain the quality of the publication.

Conventional protections are failing

The New York Times case is hardly an exception. Many other publications have disabled comments due to trolling. More generally, many online services, including games and recommendation services, are struggling to keep up with the continuous onslaught of abuse attempts. These struggles are the symptom of a larger issue:

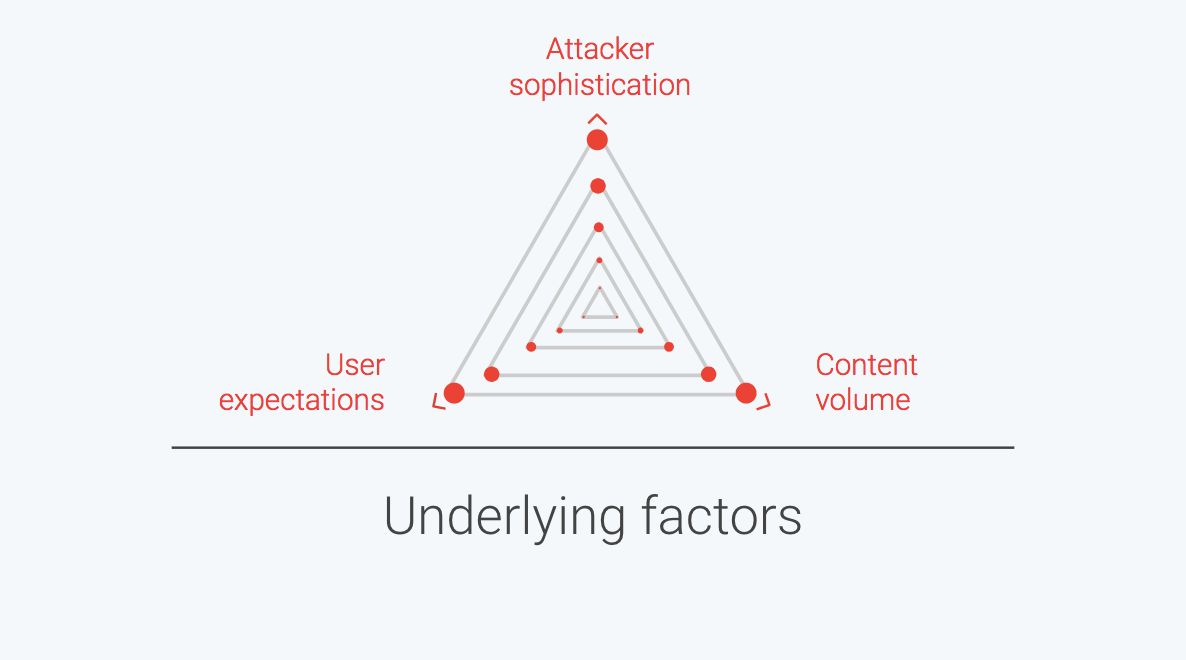

Three major underlying factors contribute to the failure of conventional protections:

- User expectations and standards have dramatically increased. These days, users perceive the mere presence of a single abusive comment, spam email or inappropriate image as a failure of the system to protect them.

- The amount and diversity of user-generated content has exploded. Dealing with this explosion requires anti-abuse systems to scale up to cover a large volume of diverse content and a wide range of attacks.

- Attacks have become increasingly sophisticated. Attackers never stop evolving, and online services now face well-executed, coordinated attacks that systematically target the weakest points of their defenses.

AI is the way forward

So, if conventional approaches are failing, how do we build anti-abuse protections that can keep up as these underlying factors keep intensifying? Based on our experience at Google, I argue that:

AI is key to building protections that keep up with user expectations and combat increasingly sophisticated attacks.

I know! The word AI is thrown around a lot these days, and skepticism surrounds it. However, as I am going to explain, there are fundamental reasons why AI is currently the best technology to build effective anti-fraud and abuse protections.

AI to the rescue of The NYT

Before delving into those fundamental reasons, let’s go back to The New York Times story so I can tell you how it ended.

The New York Times story has an awesome ending: not only were the comments reopened, but they were also extended to many more articles.

What made this happy ending possible, under the hood, is an AI system developed by Google and Jigsaw that empowered The NYT to scale up its comment moderation.

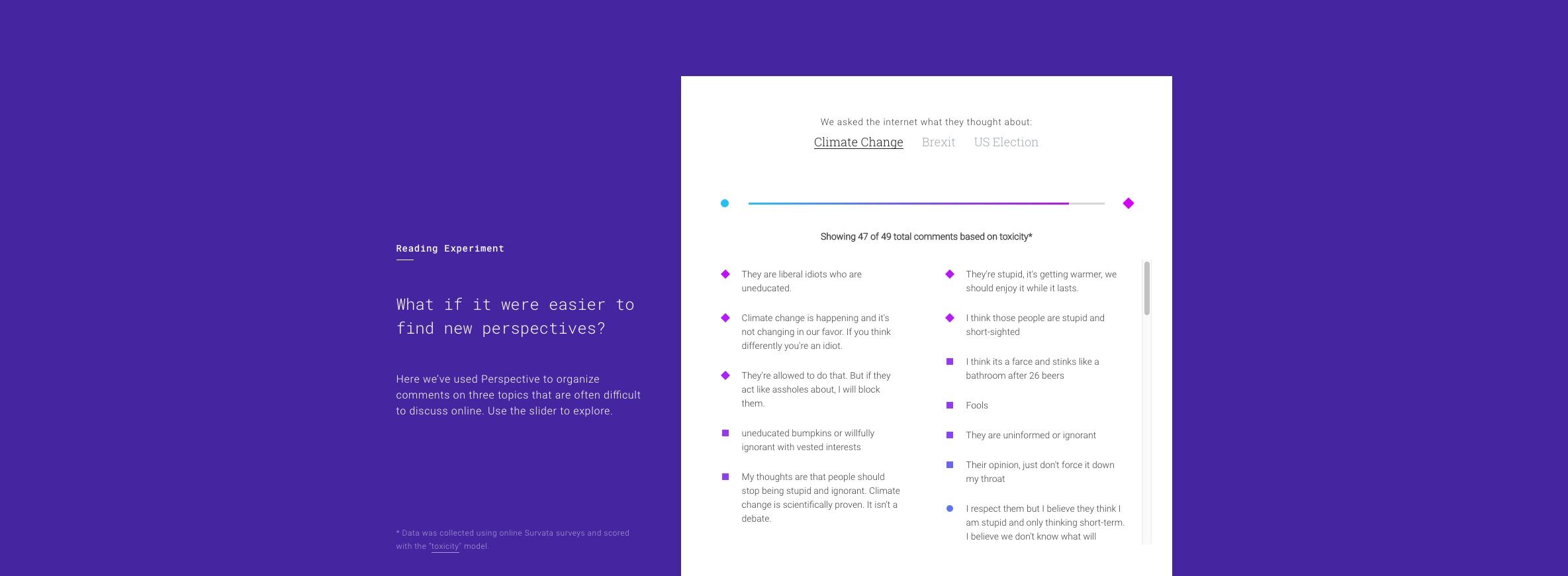

This system, called Perspective API, uses deep learning to assign a toxicity score to each of the 11,000 comments posted daily on The New York Times site. The NYT comment review team uses those scores to scale up by focusing only on potentially toxic comments. Since its release, many websites have adopted Perspective API, including Wikipedia and The Guardian.
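To make the score-based triage idea concrete, here is a minimal Python sketch. It assumes each comment already carries a toxicity score between 0 and 1 (the kind of value a model such as Perspective API produces); the `Comment` class, the `triage` function, the 0.7 threshold and the sample scores are all hypothetical, and this is not how The NYT actually configured its moderation.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Comment:
    comment_id: str
    text: str
    toxicity: float  # assumed model score in [0, 1]; higher = more likely toxic


def triage(comments: List[Comment],
           review_threshold: float = 0.7) -> Tuple[List[Comment], List[Comment]]:
    """Split comments into (review_queue, low_risk).

    Comments at or above the hypothetical threshold go to human moderators,
    most likely toxic first; the rest are treated as low risk.
    """
    if not 0.0 <= review_threshold <= 1.0:
        raise ValueError("review_threshold must be in [0, 1]")
    review_queue = [c for c in comments if c.toxicity >= review_threshold]
    low_risk = [c for c in comments if c.toxicity < review_threshold]
    review_queue.sort(key=lambda c: c.toxicity, reverse=True)
    return review_queue, low_risk


# Illustrative, made-up scores.
batch = [
    Comment("c1", "Great reporting, thanks!", 0.03),
    Comment("c2", "You are an idiot.", 0.92),
    Comment("c3", "I disagree with this analysis.", 0.12),
    Comment("c4", "Nobody wants your garbage opinion.", 0.78),
]
review, low = triage(batch)
print([c.comment_id for c in review])  # ['c2', 'c4']
print([c.comment_id for c in low])     # ['c1', 'c3']
```

Sorting the review queue by descending score puts the most likely toxic comments in front of moderators first; raising the threshold shrinks their workload at the cost of a higher risk of letting toxic comments through.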

The fundamental reasons behind the ability of AI to combat abuse

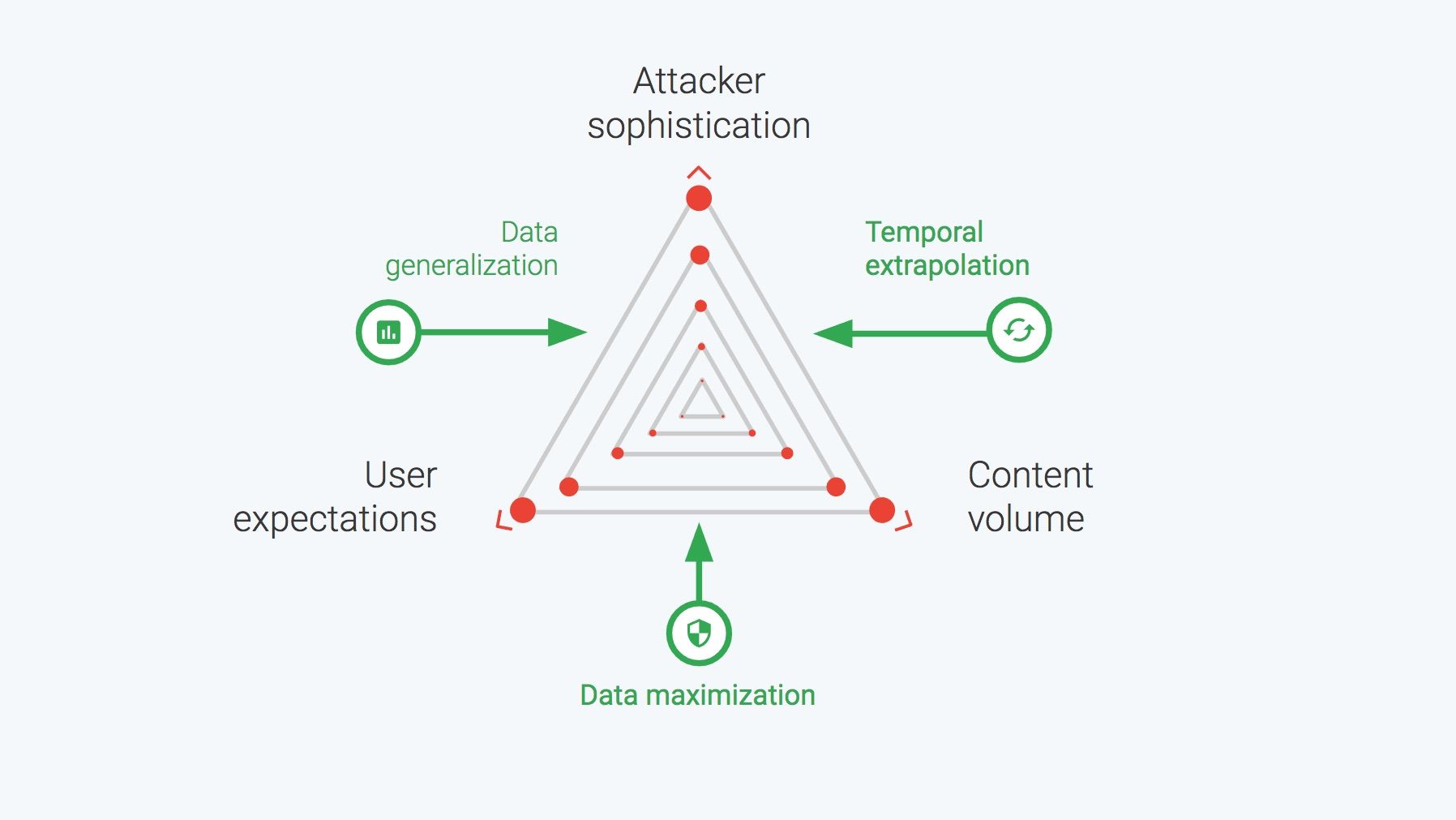

Fundamentally, AI makes it possible to build robust abuse protections because it can do the following better than any other system:

- Data generalization: Classifiers are able to accurately block content that matches ill-defined concepts, such as spam, by generalizing efficiently from their training examples.

- Temporal extrapolation: AI systems are able to identify new attacks based on the ones observed previously.

- Data maximization: By nature, an AI system can optimally combine all the detection signals to reach the best possible decision. In particular, it can exploit the nonlinear relations that exist between the various data inputs.
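The point about nonlinear relations can be illustrated with a toy example. In the sketch below, two hypothetical binary signals are each suspicious on their own but benign when they fire together (an XOR pattern). A plain logistic regression, which is a linear model, cannot separate these cases; adding a hand-crafted interaction feature `x1 * x2` fixes it. The dataset, function names and hyperparameters are illustrative assumptions, and the hand-crafted feature merely stands in for the interactions a neural network learns from data on its own.

```python
import math
from typing import List, Sequence

# Hypothetical toy data: two binary detection signals (x1, x2).
# Label 1 (abusive) when exactly one signal fires, 0 otherwise: an XOR pattern.
SIGNALS = [(0, 0), (0, 1), (1, 0), (1, 1)]
LABELS = [0, 1, 1, 0]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: List[float], x: Sequence[float]) -> float:
    """Probability of abuse; weights[0] is the bias term."""
    return sigmoid(weights[0] + sum(w * xi for w, xi in zip(weights[1:], x)))


def train_logistic(rows, labels, lr: float = 1.0, steps: int = 5000) -> List[float]:
    """Logistic regression fitted with plain full-batch gradient descent."""
    weights = [0.0] * (len(rows[0]) + 1)
    n = len(rows)
    for _ in range(steps):
        grad = [0.0] * len(weights)
        for x, y in zip(rows, labels):
            err = predict(weights, x) - y  # derivative of the log loss w.r.t. the logit
            grad[0] += err / n
            for j, xi in enumerate(x, start=1):
                grad[j] += err * xi / n
        weights = [w - lr * g for w, g in zip(weights, grad)]
    return weights


def accuracy(weights, rows, labels) -> float:
    preds = [1 if predict(weights, x) > 0.5 else 0 for x in rows]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


# Linear model on the raw signals: by symmetry the gradient is exactly zero,
# so the weights never leave zero, every prediction stays at 0.5 and half
# the examples are misclassified.
linear = train_logistic(SIGNALS, LABELS)
print(accuracy(linear, SIGNALS, LABELS))  # 0.5

# Same model with an added interaction feature x1 * x2: now linearly separable.
with_interaction = [(a, b, a * b) for a, b in SIGNALS]
nonlinear = train_logistic(with_interaction, LABELS)
print(accuracy(nonlinear, with_interaction, LABELS))  # 1.0
```

In a real system, the hidden layers of a neural network can learn such interactions from the data, which removes the need to guess and hand-craft them.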

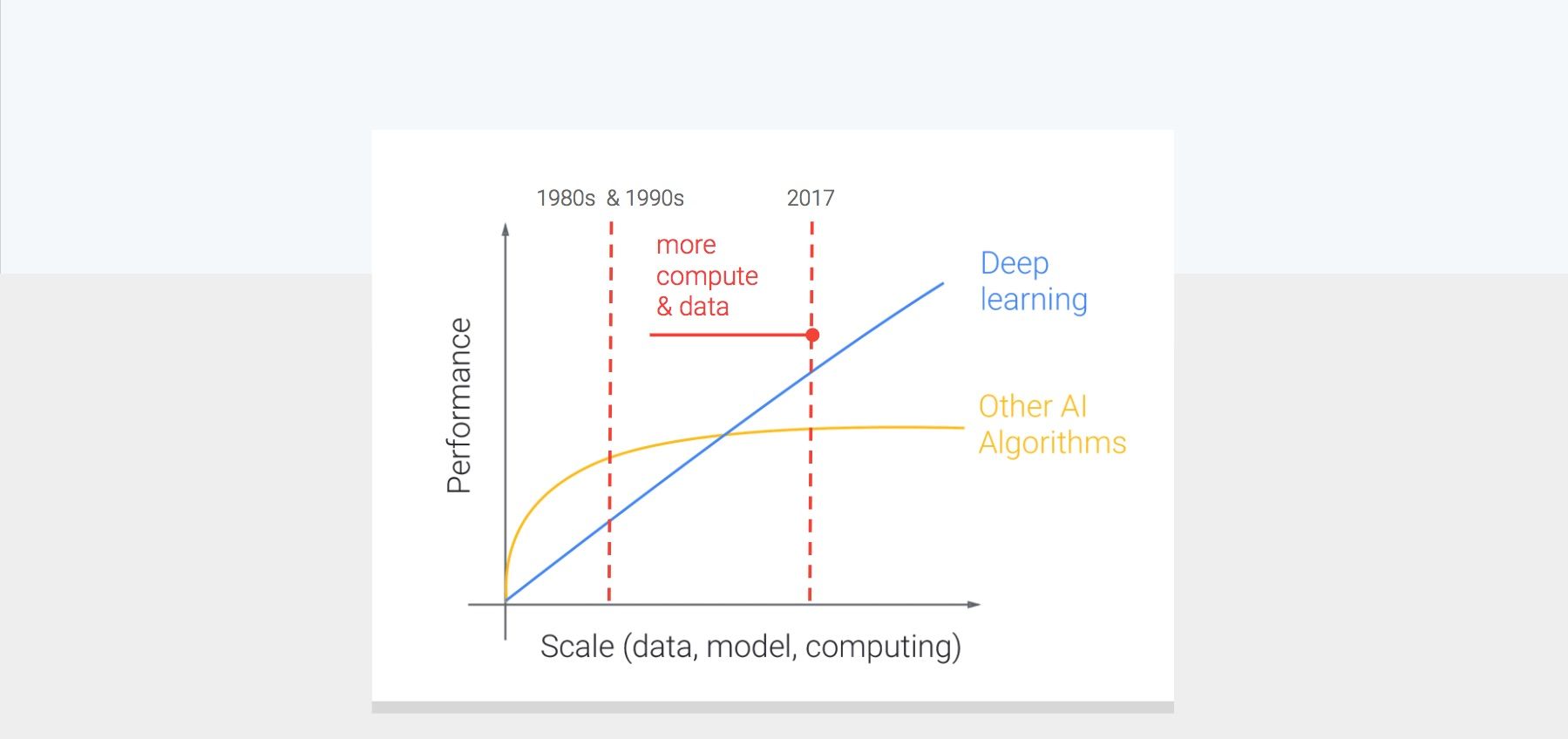

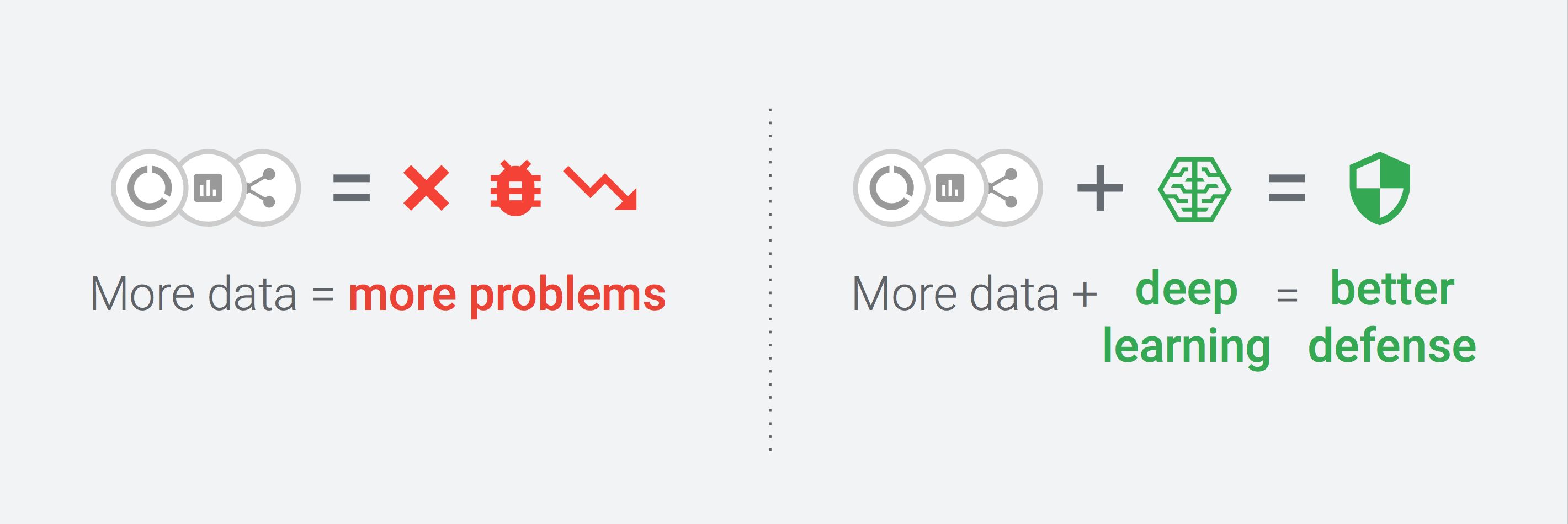

The final piece of the puzzle that explains why AI is overtaking abuse fighting, and many other fields, is the rise of deep learning. What makes deep learning so powerful is that deep neural networks, in contrast to previous AI algorithms, keep improving as more data and computational resources are used.

From an abuse-fighting perspective, this ability to scale up is a game changer because it moves us from a world where more data means more problems to a world where more data means better defense for users.

How deep learning helps Gmail to stay ahead of spammers

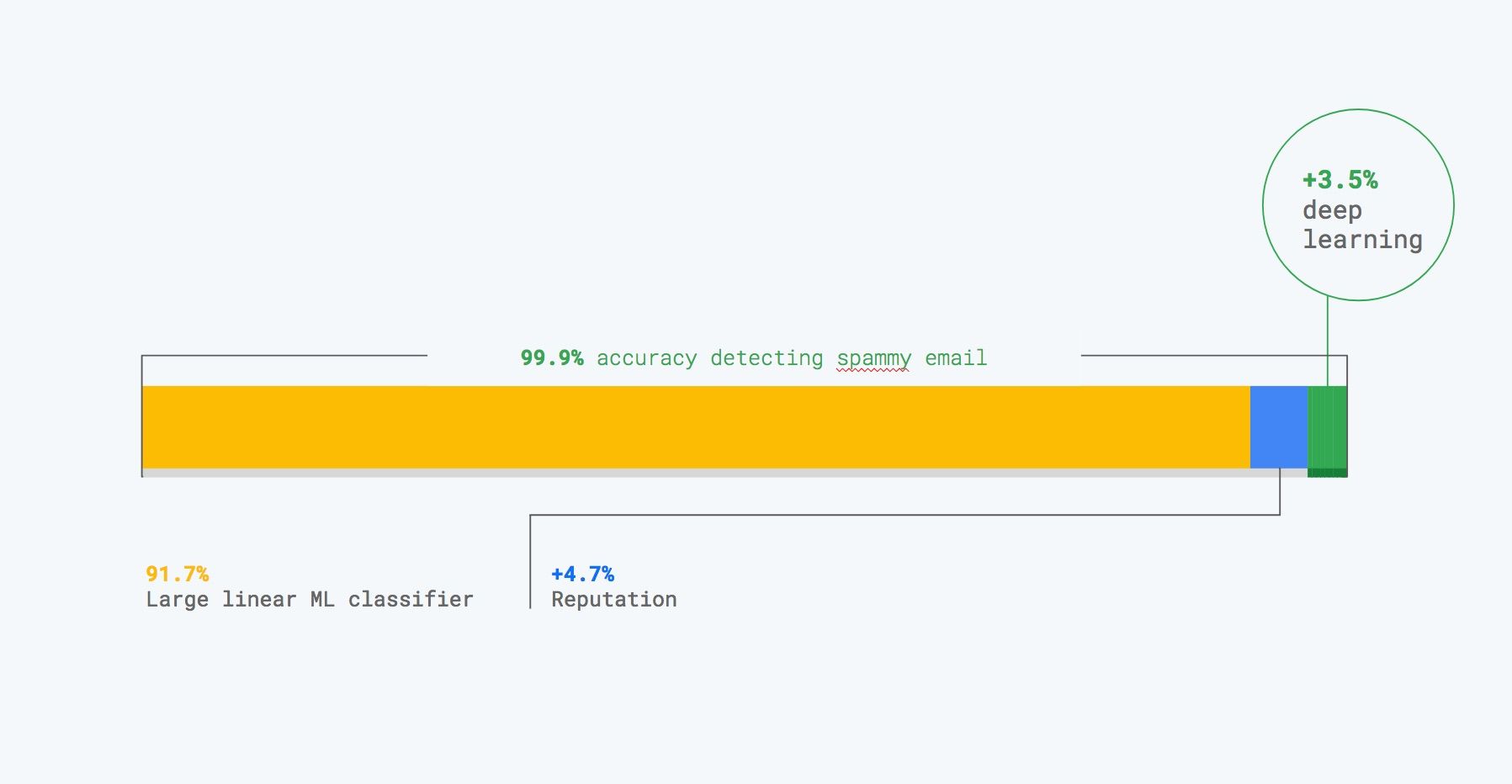

Every week, Gmail’s anti-spam filter automatically scans hundreds of billions of emails to protect its billion-plus users from phishing, spam and malware.

The component that keeps Gmail’s filter ahead of spammers is its deep learning classifier. The 3.5% additional coverage it provides comes mostly from its ability to detect advanced spam and phishing attacks that are missed by the other parts of the filter, including the previous-generation linear classifier.

Deep learning is for everyone

Now, some of you might think that deep learning only works for big companies like Google or that it is still very experimental or too expensive. Nothing could be further from the truth.

Over the last three years, deep learning has matured considerably. Between cloud APIs and free frameworks, it is easy and quick to start benefiting from deep learning. For example, TensorFlow and Keras provide performant, robust and well-documented frameworks that empower you to build state-of-the-art classifiers with just a few lines of code. You can find pre-trained models here, a list of Keras-related resources here and one for TensorFlow here.

Challenges ahead

While it is clear that AI is the way forward to build robust defenses, this does not mean that the road to success is without challenges. The next three posts will delve into the top 10 anti-abuse-specific challenges encountered when applying AI to abuse fighting, and how to overcome them.

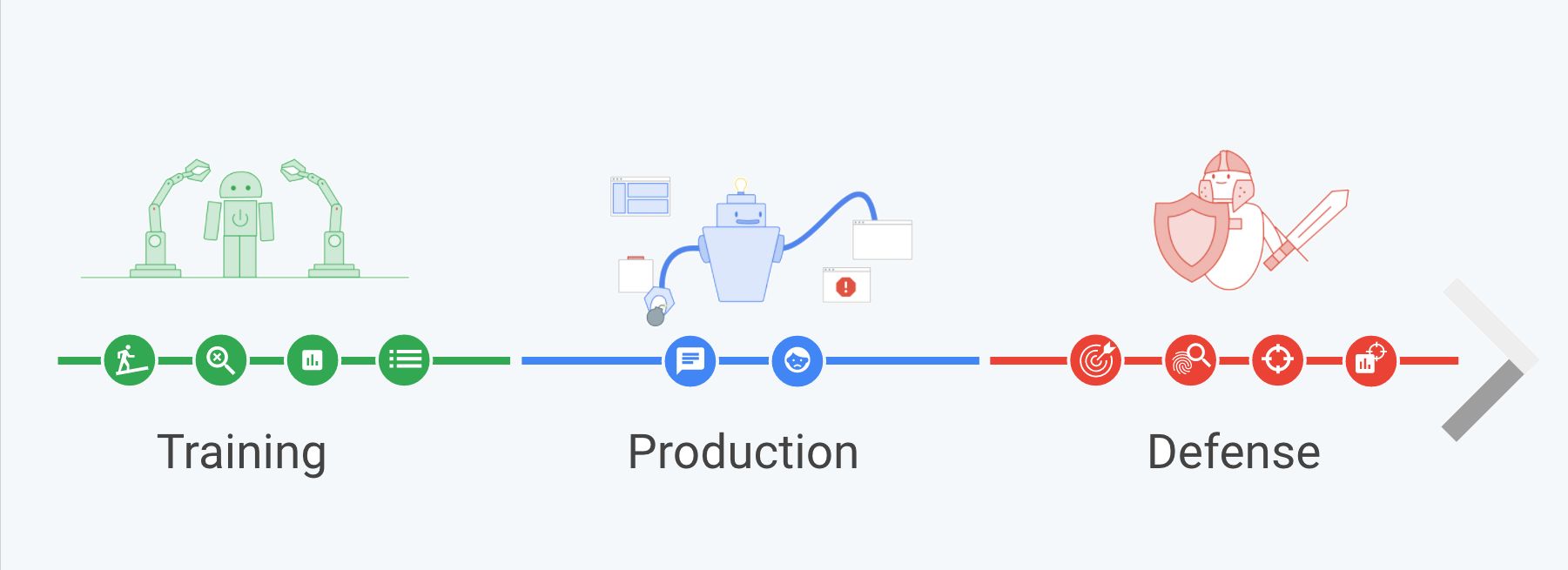

Those 10 challenges are grouped into the following three categories, one per post, which follow the natural progression of building and launching an AI-based defense system:

- Training: This post looks at how to overcome the four main challenges faced while training anti-abuse classifiers, as those challenges are the ones you will encounter first.

- Classification: This post delves into the two key problems that arise when you put your classifier in production and start blocking attacks.

- Attacks: The last post of the series discusses the four main ways attackers try to derail classifiers and how to mitigate them.

Thank you for reading this post till the end! If you enjoyed it, don’t forget to share it on your favorite social network so that your friends and colleagues can enjoy it too and learn about AI and anti-abuse.

To get notified when my next post is online, follow me on Twitter, Facebook, or LinkedIn. You can also get the full posts directly in your inbox by subscribing to the mailing list or via RSS.

See you soon!